Last year a friend who wanted to visualize how crowded a certain coworking space is asked me to help with the machine-learning part.

To measure crowding, I decided to run person detection on each space and estimate how many people are present relative to its capacity.

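Related articles:

・Detecting the "crowding level" of a coworking space (1)

・Detecting the "crowding level" of a coworking space (2)

・Detecting the "crowding level" of a coworking space (3)

・Detecting the "crowding level" of a coworking space (4)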

At first I got person detection running on the Jetson Nano 4GB I had on hand and tried to run a Jetson Nano 2GB from that same image, but it did not work.

The SD card image for the Jetson Nano 2GB differs from the one for the 4GB model. Below are my notes from getting person detection running on the 2GB model.

1. Setting up the environment

1.1 Writing the SD card image [1]

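Writing the SD card image as described in [1] works without problems.

(Note 1) The Jetson Nano 4GB and 2GB models use different base SD card images, so make sure you download the right one.

(Note 2) Use a fast SD card, roughly SDXC UHS-I U3 V30 A2 class.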

1.2 Installing Jetson tools [2]

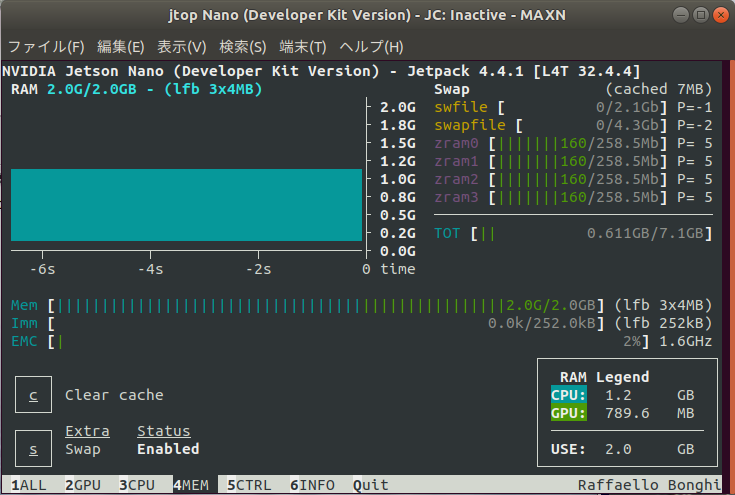

First, I installed jetson-stats to monitor the Jetson Nano's load (CPU, GPU, memory, etc.).

See [2] for how to use jtop.

    user@user-desktop:~$ sudo apt-get install python3-pip
    user@user-desktop:~$ sudo -H pip3 install jetson-stats

1.3 Installing TensorFlow/Keras [3]

TensorFlow can basically be installed by following [3].

This time, however, I wanted TensorFlow 1.15.x rather than the latest release, so I pinned the version as follows.

    user@user-desktop:~$ sudo pip3 install --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v44 tensorflow==1.15.4

(Note) As of January 2021, the JetPack version in the 2GB SD card image is 4.4.1.

Also, because I am using ssd_keras, I installed Keras 2.2.4, which works with TensorFlow 1.15.x.

    user@user-desktop:~$ sudo pip3 install keras==2.2.4
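Because the TensorFlow wheel comes from NVIDIA's index while Keras is pinned separately, a quick check of what actually got installed can save some debugging later. This is a minimal sketch, assuming you have already collected the installed versions yourself (for example from `pip3 show tensorflow keras`); the helper names are hypothetical. It also assumes NVIDIA's wheels carry a PEP 440 local version label such as `+nv20.12`, which pip ignores when matching a pin like `==1.15.4`, so the check strips it the same way.

```python
# Hypothetical helper: check installed package versions against the pins used in this setup.
# Assumes versions may carry a PEP 440 local label (e.g. "1.15.4+nv20.12"); like pip's
# "==" matching against a pin without a local label, the label is ignored when comparing.

PINS = {
    "tensorflow": "1.15.4",  # TF 1.15.x from NVIDIA's JetPack 4.4 (v44) index
    "keras": "2.2.4",        # Keras version used with ssd_keras on TF 1.15.x
}


def public_version(version: str) -> str:
    """Drop the local version label (everything after '+')."""
    return version.split("+", 1)[0]


def check_pins(installed: dict, pins: dict = PINS) -> list:
    """Return human-readable problems; an empty list means every pin matches."""
    problems = []
    for name, wanted in pins.items():
        have = installed.get(name)
        if have is None:
            problems.append(f"{name}: not installed (want {wanted})")
        elif public_version(have) != wanted:
            problems.append(f"{name}: {have} installed, want {wanted}")
    return problems


if __name__ == "__main__":
    # Example values only; replace them with the versions reported on your board.
    example = {"tensorflow": "1.15.4+nv20.12", "keras": "2.2.4"}
    print(check_pins(example) or "all pins satisfied")
```

Returning a list of messages instead of raising keeps the check usable both interactively and from a setup script.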

1.4 Disabling the GUI

With only 2GB of memory, I disabled the GUI and switched to a text console (CUI) environment.

    user@user-desktop:~$ systemctl get-default
    graphical.target
    user@user-desktop:~$ sudo systemctl set-default multi-user.target
    Removed /etc/systemd/system/default.target.
    Created symlink /etc/systemd/system/default.target → /lib/systemd/system/multi-user.target.
    user@user-desktop:~$ sudo reboot

[Before]

    user@user-desktop:~$ free -h
                  total        used        free      shared  buff/cache   available
    Mem:           1.9G        417M        1.1G         27M        438M        1.4G
    Swap:          7.0G          0B        7.0G

[After]

    user@user-desktop:~$ free -h
                  total        used        free      shared  buff/cache   available
    Mem:           1.9G        225M        1.4G         18M        326M        1.6G
    Swap:          7.0G          0B        7.0G
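To put a number on the before/after difference instead of eyeballing it, you can parse and compare the `Mem:` rows of the two `free -h` outputs above. This is a minimal sketch that assumes the column layout shown there (total, used, free, shared, buff/cache, available) and only understands the K/M/G suffixes; the function names are illustrative.

```python
# Compare the "Mem:" rows of two `free -h` outputs (column layout as shown above).
# Only the K/M/G suffixes are handled; every value is converted to MiB.

COLUMNS = ["total", "used", "free", "shared", "buff/cache", "available"]
UNIT_MIB = {"K": 1 / 1024, "M": 1, "G": 1024}


def to_mib(value: str) -> float:
    """Convert a human-readable size such as '417M' or '1.4G' to MiB."""
    number, unit = value[:-1], value[-1]
    return float(number) * UNIT_MIB[unit]


def parse_mem_row(line: str) -> dict:
    """Parse a 'Mem:' row from `free -h` into {column: MiB}."""
    fields = line.split()
    if fields[0] != "Mem:":
        raise ValueError("expected a 'Mem:' row")
    return {col: to_mib(v) for col, v in zip(COLUMNS, fields[1:])}


def diff_mib(before: str, after: str) -> dict:
    """Return (after - before) for each column, in MiB."""
    b, a = parse_mem_row(before), parse_mem_row(after)
    return {col: a[col] - b[col] for col in COLUMNS}


if __name__ == "__main__":
    before = "Mem: 1.9G 417M 1.1G 27M 438M 1.4G"
    after = "Mem: 1.9G 225M 1.4G 18M 326M 1.6G"
    for col, delta in diff_mib(before, after).items():
        print(f"{col:>10}: {delta:+8.1f} MiB")
```

With the numbers above, `used` drops by 192 MiB and `available` rises by roughly 200 MiB. Because `free -h` rounds its output, treat these figures as approximate.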

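2. Testing person detection

2.1 First, just run it

I commented out the OpenCV GUI display in the person detection program from the earlier articles and, because it runs on the GPU, added GPU options before running it.

[GPU options]

    import tensorflow as tf
    from keras.backend import tensorflow_backend

    # Let TensorFlow grow GPU memory on demand instead of reserving it all up front
    config = tf.ConfigProto()
    config.gpu_options.allow_growth = True
    session = tf.Session(config=config)
    tensorflow_backend.set_session(session)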

However, I got the following error...

    (snip)
    2021-01-15 23:20:25.295710: I tensorflow/core/common_runtime/bfc_allocator.cc:905] InUse at 0xf00e10000 next 18446744073709551615 of size 4194304
    2021-01-15 23:20:25.295743: I tensorflow/core/common_runtime/bfc_allocator.cc:898] Next region of size 4198400
    2021-01-15 23:20:25.295778: I tensorflow/core/common_runtime/bfc_allocator.cc:905] InUse at 0xf01210000 next 18446744073709551615 of size 4198400
    2021-01-15 23:20:25.295812: I tensorflow/core/common_runtime/bfc_allocator.cc:914] Summary of in-use Chunks by size:
    2021-01-15 23:20:25.295852: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 15 Chunks of size 256 totalling 3.8KiB
    2021-01-15 23:20:25.295894: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 512 totalling 512B
    2021-01-15 23:20:25.295955: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 1024 totalling 1.0KiB
    2021-01-15 23:20:25.296003: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 1280 totalling 1.2KiB
    2021-01-15 23:20:25.296052: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 2 Chunks of size 2048 totalling 4.0KiB
    2021-01-15 23:20:25.296102: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 4096 totalling 4.0KiB
    2021-01-15 23:20:25.296155: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 2 Chunks of size 131072 totalling 256.0KiB
    2021-01-15 23:20:25.296210: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 262144 totalling 256.0KiB
    2021-01-15 23:20:25.296266: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 771840 totalling 753.8KiB
    2021-01-15 23:20:25.296323: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 786176 totalling 767.8KiB
    2021-01-15 23:20:25.296379: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 1048576 totalling 1.00MiB
    2021-01-15 23:20:25.296435: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 4194304 totalling 4.00MiB
    2021-01-15 23:20:25.296490: I tensorflow/core/common_runtime/bfc_allocator.cc:917] 1 Chunks of size 4198400 totalling 4.00MiB
    2021-01-15 23:20:25.296546: I tensorflow/core/common_runtime/bfc_allocator.cc:921] Sum Total of in-use chunks: 11.00MiB
    2021-01-15 23:20:25.296598: I tensorflow/core/common_runtime/bfc_allocator.cc:923] total_region_allocated_bytes_: 11538432 memory_limit_: 11538432 available bytes: 0 curr_region_allocation_bytes_: 16777216
    2021-01-15 23:20:25.296660: I tensorflow/core/common_runtime/bfc_allocator.cc:929] Stats:
    Limit: 11538432
    InUse: 11538432
    MaxInUse: 11538432
    NumAllocs: 29
    MaxAllocSize: 4198400
    2021-01-15 23:20:25.296721: W tensorflow/core/common_runtime/bfc_allocator.cc:424] ********x****************xx*********************xxxxxxxxxxxxxxx**********************xxxxxxxxxxxxxxx
    2021-01-15 23:20:25.296818: W tensorflow/core/framework/op_kernel.cc:1651] OP_REQUIRES failed at random_op.cc:76 : Resource exhausted: OOM when allocating tensor with shape[3,3,1024,12] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc
    conv9_2_mbox_priorbox_reshape[0][
    __________________________________________________________________________________________________
    predictions (Concatenate) (None, 8732, 14) 0 mbox_conf_softmax[0][0]
                                                 mbox_loc[0][0]
                                                 mbox_priorbox[0][0]
    __________________________________________________________________________________________________
    decoded_predictions (DecodeDete (None, <tf.Tensor 't 0 predictions[0][0]
    ==================================================================================================
    Total params: 23,745,908
    Trainable params: 23,745,908
    Non-trainable params: 0
    __________________________________________________________________________________________________
    None
    Traceback (most recent call last):
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 1365, in _do_call
        return fn(*args)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 1350, in _run_fn
        target_list, run_metadata)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 1443, in _call_tf_sessionrun
        run_metadata)
    tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[3,3,512,512] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc
      [[{{node conv4_2/truncated_normal/TruncatedNormal}}]]
    Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "recog_nocv.py", line 97, in <module>
        load_model()
      File "recog_nocv.py", line 60, in load_model
        model.load_weights(weights_path, by_name=True)
      File "/usr/local/lib/python3.6/dist-packages/keras/engine/network.py", line 1163, in load_weights
        reshape=reshape)
      File "/usr/local/lib/python3.6/dist-packages/keras/engine/saving.py", line 1154, in load_weights_from_hdf5_group_by_name
        K.batch_set_value(weight_value_tuples)
      File "/usr/local/lib/python3.6/dist-packages/keras/backend/tensorflow_backend.py", line 2470, in batch_set_value
        get_session().run(assign_ops, feed_dict=feed_dict)
      File "/usr/local/lib/python3.6/dist-packages/keras/backend/tensorflow_backend.py", line 206, in get_session
        session.run(tf.variables_initializer(uninitialized_vars))
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 956, in run
        run_metadata_ptr)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 1180, in _run
        feed_dict_tensor, options, run_metadata)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 1359, in _do_run
        run_metadata)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 1384, in _do_call
        raise type(e)(node_def, op, message)
    tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[3,3,512,512] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc
      [[node conv4_2/truncated_normal/TruncatedNormal (defined at /usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/ops.py:1748) ]]
    Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

    Original stack trace for 'conv4_2/truncated_normal/TruncatedNormal':
      File "recog_nocv.py", line 97, in <module>
        load_model()
      File "recog_nocv.py", line 56, in load_model
        nms_max_output_size=400)
      File "./ssd_keras/models/keras_ssd300.py", line 288, in ssd_300
        conv4_2 = Conv2D(512, (3, 3), activation='relu', padding='same', kernel_initializer='he_normal', kernel_regularizer=l2(l2_reg), name='conv4_2')(conv4_1)
      File "/usr/local/lib/python3.6/dist-packages/keras/engine/base_layer.py", line 431, in __call__
        self.build(unpack_singleton(input_shapes))
      File "/usr/local/lib/python3.6/dist-packages/keras/layers/convolutional.py", line 141, in build
        constraint=self.kernel_constraint)
      File "/usr/local/lib/python3.6/dist-packages/keras/legacy/interfaces.py", line 91, in wrapper
        return func(*args, **kwargs)
      File "/usr/local/lib/python3.6/dist-packages/keras/engine/base_layer.py", line 249, in add_weight
        weight = K.variable(initializer(shape),
      File "/usr/local/lib/python3.6/dist-packages/keras/initializers.py", line 214, in __call__
        dtype=dtype, seed=self.seed)
      File "/usr/local/lib/python3.6/dist-packages/keras/backend/tensorflow_backend.py", line 4185, in truncated_normal
        return tf.truncated_normal(shape, mean, stddev, dtype=dtype, seed=seed)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/ops/random_ops.py", line 175, in truncated_normal
        shape_tensor, dtype, seed=seed1, seed2=seed2)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/ops/gen_random_ops.py", line 1016, in truncated_normal
        name=name)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/op_def_library.py", line 794, in _apply_op_helper
        op_def=op_def)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/util/deprecation.py", line 513, in new_func
        return func(*args, **kwargs)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/ops.py", line 3357, in create_op
        attrs, op_def, compute_device)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/ops.py", line 3426, in _create_op_internal
        op_def=op_def)
      File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/framework/ops.py", line 1748, in __init__
        self._traceback = tf_stack.extract_stack()

I have run into "ResourceExhaustedError" before, typically during training with a large batch size, when the GPU ran out of memory.

On the Jetson Nano, the CPU and GPU share the same 2GB of memory, so it is not surprising that software carried over unchanged from a PC raises "ResourceExhaustedError" even when it is not training.
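The allocator statistics in the log above put numbers on the failure: `memory_limit_: 11538432` and `available bytes: 0`, while the op that failed was allocating a float32 tensor of shape `[3,3,512,512]` (the `conv4_2` kernel). The sketch below only does that arithmetic, assuming 4 bytes per float32 element. The constant and function names are illustrative.

```python
# Back-of-the-envelope sizes taken from the ResourceExhaustedError log above.
# Assumes float32 (4 bytes per element); 1 MiB = 2**20 bytes.
from functools import reduce
from operator import mul

BYTES_PER_FLOAT32 = 4
MIB = 2 ** 20

# Values copied from the log above.
ALLOCATOR_LIMIT_BYTES = 11538432  # "memory_limit_: 11538432" (with "available bytes: 0")
FAILED_SHAPE = (3, 3, 512, 512)   # "OOM when allocating tensor with shape[3,3,512,512]" (conv4_2 kernel)


def tensor_bytes(shape, bytes_per_elem=BYTES_PER_FLOAT32):
    """Number of bytes needed for a dense tensor of the given shape."""
    return reduce(mul, shape, 1) * bytes_per_elem


if __name__ == "__main__":
    print(f"allocator limit : {ALLOCATOR_LIMIT_BYTES / MIB:6.2f} MiB")
    print(f"conv4_2 kernel  : {tensor_bytes(FAILED_SHAPE) / MIB:6.2f} MiB")
```

By this arithmetic, TensorFlow's GPU allocator had a budget of only about 11 MiB and had already used all of it. Even a single 9 MiB convolution kernel therefore could not be placed, regardless of batch size.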

2.2 Working around "ResourceExhaustedError" [4]

While searching for fixes to Jetson Nano errors, I found a thread [4] and tried its suggestion.

    import tensorflow as tf
    from keras.backend import tensorflow_backend

    config = tf.ConfigProto()
    config.gpu_options.allow_growth = True
    # Give the GPU 2GB x 0.2 = 400MB
    config.gpu_options.per_process_gpu_memory_fraction = 0.20
    session = tf.Session(config=config)
    tensorflow_backend.set_session(session)

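It printed a flood of memory-related messages, but it finally ran!

That said, processing a single image took about five minutes.

Also, the memory usage showed about 1.2GB on the CPU side and 789MB on the GPU side, so it is unclear how the 0.20 setting actually took effect.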

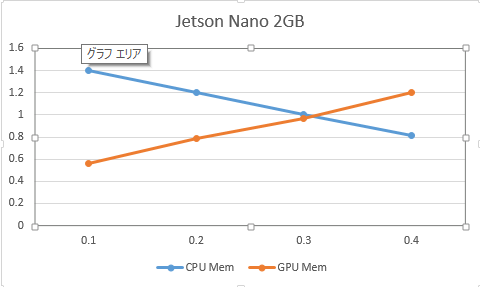

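2.3 Investigating per_process_gpu_memory_fraction

I ran a quick check to see whether the per_process_gpu_memory_fraction setting changes the amount of memory allocated to the GPU.

| per_process_gpu_memory_fraction | CPU memory (GB) | GPU memory (GB) |
|---|---|---|
| 0.1 | 1.4 | 0.563 |
| 0.2 | 1.2 | 0.789 |
| 0.3 | 1.0 | 0.966 |
| 0.4 | 0.811 | 1.2 |
| 0.5 | - | - |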

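Each 0.1 increase in the setting raises the application's GPU memory use by about 10% of the 2GB, that is, roughly 200MB.

The GPU memory used by the CUDA libraries, however, appears to be independent of the setting.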
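You can check both observations against the four measured rows of the table above with an ordinary least-squares line. The 0.5 row is left out because it has no values. This sketch works only with the table's own numbers, and the linear model is an assumption. The slope estimates how much GPU memory each unit of `per_process_gpu_memory_fraction` adds, and the intercept estimates the share that does not depend on the setting.

```python
# Least-squares fit of GPU memory (GB) vs. per_process_gpu_memory_fraction,
# using the four measured rows from the table above (the 0.5 row has no values).

FRACTIONS = [0.1, 0.2, 0.3, 0.4]
GPU_GB = [0.563, 0.789, 0.966, 1.2]
CPU_GB = [1.4, 1.2, 1.0, 0.811]


def fit_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    slope, intercept = fit_line(FRACTIONS, GPU_GB)
    print(f"GPU memory per +0.1 step: {slope * 0.1:.3f} GB")
    print(f"extrapolated to fraction 0: {intercept:.3f} GB")
    # CPU + GPU memory from the same table, row by row
    print([round(c + g, 3) for c, g in zip(CPU_GB, GPU_GB)])
```

With the table's values, the slope comes out to about 0.21 GB per 0.1 step, which matches the roughly 200MB observed. The line extrapolates to about 0.36 GB at a fraction of 0, which would be the setting-independent share, such as the CUDA libraries. The CPU and GPU columns also add up to about 2.0 GB in every row (1.96 to 2.01 GB), which is consistent with a single shared memory pool.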

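Even with the value set to 0.3 or 0.4 to secure as much GPU memory as possible, the program is not usable in its current state.

It runs without much trouble on the Jetson Nano 4GB, but now that I have started working with the 2GB model, I want to push a little further.

Next time I plan to look at ways to reduce GPU memory usage, such as making the model more compact.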
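For a rough yardstick on compaction, note that weight memory scales with the number of bytes per parameter. The sketch below uses the `Total params: 23,745,908` line from the model summary in the log above and compares float32 with float16 storage. It counts weights only (no activations, workspace, or CUDA context), and float16 is an assumed candidate here, not something tested in this article.

```python
# Rough weight-memory footprint of the SSD300 model at different precisions.
# Counts parameters only; activations, workspace and CUDA context are NOT included.

TOTAL_PARAMS = 23745908  # "Total params: 23,745,908" from the model summary above
MIB = 2 ** 20
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_mib(n_params, dtype):
    """Memory needed to hold n_params weights of the given dtype, in MiB."""
    return n_params * BYTES_PER_PARAM[dtype] / MIB


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_mib(TOTAL_PARAMS, dtype):.1f} MiB")
```

This gives about 90.6 MiB for float32 and about 45.3 MiB for float16. Halving the weights alone would not fix the memory situation, but it gives a sense of scale for the model-size side of the problem.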

If anyone knows this area well, I would be very grateful for advice.

----

References:

[1] Getting Started with Jetson Nano 2GB Developer Kit

[2] Jetson nanoの負荷の状況を表示するコマンド – CPU/GPUの使用率を見る

[3] Installing TensorFlow For Jetson Platform

[4] Out of memory error from TensorFlow: any workaround for this, or do I just need a bigger boat?